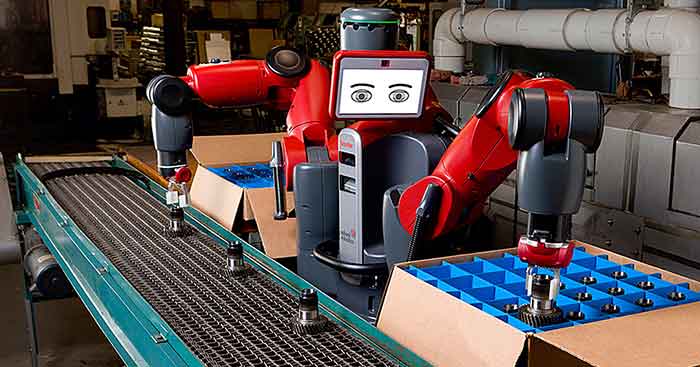

Robots are probably the first thing you think of when asked to imagine AI applied to industrials and manufacturing. Indeed, many innovative companies, like Rodney Brooks’ Rethink Robotics, have developed friendly-looking robot factory workers who hustle alongside their human colleagues. Industrial robots have historically been designed to perform narrow, specific tasks, but modern robots can be taught new tasks and make real-time decisions.

As sexy and shiny as robots are, most of the value of AI in manufacturing lies in transforming data from sensors and routine hardware into intelligent predictions for better and faster decision-making. Fifteen billion machines are currently connected to the Internet, and Cisco predicts the number will surpass 50 billion by 2020. Connecting these machines into intelligent automated systems in the cloud is the next major step in the evolution of manufacturing and industry.

In 2015, General Electric launched GE Digital to drive software innovation across all departments. Harel Kodesh, CTO of GE Digital, explains the challenges that make applying AI to industrials so different from building consumer applications.

Why Is Industrial AI So Much Harder Than Consumer AI?

1. Industrial Data Is Often Inaccurate

“For machine learning to work properly, you need lots of data. Consumer data is harder to misunderstand, for example when you buy a pizza or click on an ad,” says Kodesh. “When looking at the industrial internet, however, 40% of the data coming in is spurious and isn’t useful.”

Let’s say you need to calculate how far a combine needs to drill and you stick a moisture sensor into the ground to take important measurements. The readings can be skewed by extreme temperatures, accidental man-handling, hardware malfunctions, or even a worm that’s been accidentally skewered by the device. “We are not generating data from the comfort and safety of a computer in your den,” Kodesh emphasizes.
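Cleaning readings like these usually happens in two passes: reject values that are physically impossible, then reject values that are statistical outliers. The sketch below is a minimal illustration, not GE's pipeline. It assumes a hypothetical valid moisture range of 0 to 100% and uses a median absolute deviation (MAD) test with the commonly cited 3.5 cutoff on the modified z-score; the function name `clean_readings` and all thresholds are illustrative assumptions.

```python
from statistics import median

def clean_readings(readings, lo=0.0, hi=100.0, k=3.5):
    """Drop moisture readings that are impossible or statistical outliers.

    lo/hi: hypothetical physical range of the sensor, in percent moisture.
    k: cutoff on the modified z-score (3.5 is a common rule of thumb).
    """
    # Pass 1: discard out-of-range values. NaN fails both comparisons,
    # so it is discarded here too.
    plausible = [r for r in readings if lo <= r <= hi]
    if len(plausible) < 3:
        return plausible
    # Pass 2: robust outlier test. Median and MAD are not dragged around
    # by the very outliers we are trying to find, unlike mean and stdev.
    med = median(plausible)
    mad = median(abs(r - med) for r in plausible)
    if mad == 0:
        return plausible  # no spread to judge against; keep everything
    return [r for r in plausible if 0.6745 * abs(r - med) / mad <= k]

# A hypothetical batch: an impossible 250%, a negative value, a NaN,
# and a 60% spike (say, a skewered worm) among readings near 22%.
raw = [21.0, 22.5, 21.8, 250.0, 22.1, -5.0, 21.5, 60.0, float("nan")]
cleaned = clean_readings(raw)  # -> [21.0, 22.5, 21.8, 22.1, 21.5]
```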

2. AI Runs On The Edge, Not In The Cloud

Consumer data is processed in the cloud on computing clusters with seemingly infinite capacity. Amazon can luxuriously take its time to crunch your browsing and purchase history and show you new recommendations. “In consumer predictions, there’s low value to false negatives and to false positives. You’ll forget that Amazon recommended you a crappy book,” Kodesh notes.

On a deep-sea oil rig, a riser is a conduit that transports oil from subsea wells to a surface facility. If a problem arises, several clamps must respond immediately to shut the valve. The sophisticated software that manages the actuators on those clamps tracks minute changes in temperature and pressure. Any mistake could mean disaster.

The stakes are much higher, and response times much tighter, in industrial applications, where millions of dollars and human lives can be on the line. In these cases, critical functions cannot depend on a trip to the cloud; they must run on location, at what is known as “the edge.”

Industrial AI is built as an end-to-end system, described by Kodesh as a “round-trip ticket”: data is generated by sensors on the edge, served to algorithms, modeled in the cloud, and then moved back to the edge for implementation. Between the edge and the cloud sit supervisor gateways and multiple computing and storage nodes, since the entire system must be able to run the right load in the right places.
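A toy version of the round trip, under heavy simplifying assumptions: the "model" is just a mean-plus-k-standard-deviations pressure limit fitted in the cloud, serialized to JSON, and evaluated locally by a hypothetical `EdgeController`, so the shut-off decision never waits on a network call. The gateways and storage nodes are omitted, and every name and number here is illustrative.

```python
import json
from statistics import mean, stdev

def train_in_cloud(history, k=3.0):
    """Cloud side: fit a simple anomaly limit from historical pressure data.

    The model (mean + k * sample stdev) is a hypothetical stand-in for
    whatever the cloud actually trains; it is serialized for shipping.
    """
    return json.dumps({"mu": mean(history), "sigma": stdev(history), "k": k})

class EdgeController:
    """Edge side: load the model once, then decide locally per reading."""

    def __init__(self, model_json):
        m = json.loads(model_json)
        self.limit = m["mu"] + m["k"] * m["sigma"]

    def should_shut_valve(self, pressure):
        # Pure local arithmetic: no cloud round trip on the critical path.
        return pressure > self.limit

# Usage: train on hypothetical history, ship the JSON, decide at the edge.
model = train_in_cloud([100, 102, 98, 101, 99])  # limit is about 104.7
edge = EdgeController(model)
edge.should_shut_valve(110)  # True
edge.should_shut_valve(101)  # False
```

Shipping parameters rather than raw data is the point of the design: the heavy fitting happens where compute is plentiful, while the edge only needs a comparison it can evaluate in microseconds.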

In a manufacturing facility that processes ore into platinum bars, bars that come out with the wrong consistency must be detected immediately so the pressure can be adjusted at the start of the process. Any delay means wasted material. Similarly, a wind turbine is constantly ingesting data to control operations. Kodesh highlights one of many possible malfunctions: “the millionth byte might be the torque on the blade, but if the torque is too high, the turbine blades will fall off. We need to serve this critical information first even if it’s in the millionth place in the queue.”
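Serving the torque reading first, even when it arrives behind a million other bytes, is the job of a priority queue. A minimal sketch using Python's `heapq`, assuming hypothetical channel names and priority levels (lower number is served first); it illustrates the ordering idea only and makes no claim about meeting a 10-millisecond budget.

```python
import heapq
import itertools

# Hypothetical priority levels: lower number = served first.
PRIORITY = {"blade_torque": 0, "vibration": 1, "temperature": 2, "telemetry": 9}

class SensorQueue:
    """Min-heap of readings keyed by channel priority, FIFO within a level."""

    def __init__(self):
        self._heap = []
        # Monotonic counter breaks ties so equal-priority readings keep
        # arrival order and the heap never compares channel/value fields.
        self._seq = itertools.count()

    def push(self, channel, value):
        prio = PRIORITY.get(channel, 9)  # unknown channels get lowest priority
        heapq.heappush(self._heap, (prio, next(self._seq), channel, value))

    def pop(self):
        _, _, channel, value = heapq.heappop(self._heap)
        return channel, value

    def __len__(self):
        return len(self._heap)

# Usage: a torque reading pushed after 1,000 routine messages is served first.
q = SensorQueue()
for i in range(1000):
    q.push("telemetry", i)
q.push("blade_torque", 42.0)
first = q.pop()  # ("blade_torque", 42.0)
```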

Serving the correct data in real-time is a task so challenging that GE must rely on custom, in-house solutions. “Spark is fast,” admits Kodesh, “but when you make decisions in 10 milliseconds, you need different solutions.”

3. A Single Prediction Can Cost Up To $1,000

Despite the high volume of faulty data and the limited processing power at the edge, industrial AI still needs to be incredibly accurate. If an analytical system on a plane determines that an engine is faulty, specialist technicians and engineers must be dispatched to remove and repair the faulty part. Simultaneously, a loaner engine must be provided so the airline can keep up flight operations. The total cost can easily exceed $200,000.

“We’re not going to call and tell you there is a problem where there isn’t, and we’re definitely not going to tell you there isn’t a problem when there is,” says Kodesh. “We want to make sure we have very high fidelity systems.”
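Why both kinds of error matter can be made concrete with a standard expected-cost decision rule: dispatch a crew only when p × C_miss > (1 − p) × C_false_alarm, which gives a break-even fault probability of C_false_alarm / (C_false_alarm + C_miss). In this sketch the $200,000 false-alarm cost is the rough figure from the engine example, while the $5,000,000 cost of a missed fault is a purely hypothetical placeholder. It also simplifies by ignoring the repair cost a genuine fault incurs either way.

```python
def should_dispatch(p_fault, cost_false_alarm, cost_missed_fault):
    """Act only if the expected cost of ignoring exceeds that of acting.

    Simplified model: acting on a healthy engine costs cost_false_alarm;
    ignoring a genuinely faulty one costs cost_missed_fault.
    """
    expected_cost_ignore = p_fault * cost_missed_fault
    expected_cost_act = (1 - p_fault) * cost_false_alarm
    return expected_cost_ignore > expected_cost_act

def break_even_probability(cost_false_alarm, cost_missed_fault):
    """Fault probability at which acting and ignoring cost the same."""
    return cost_false_alarm / (cost_false_alarm + cost_missed_fault)

FALSE_ALARM = 200_000    # from the engine example
MISSED_FAULT = 5_000_000  # hypothetical placeholder

threshold = break_even_probability(FALSE_ALARM, MISSED_FAULT)  # about 0.038
```

The rule shows why fidelity is so valuable here: every point of probability error in the model moves a very expensive decision across that threshold, in either direction.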

According to Kodesh, the only way to ensure such high fidelity and performance is to run thousands of algorithms at the same time. A consumer company like Amazon might make $1 to $9 on a book, so it is only willing to spend $0.001 on each user prediction. With hundreds of thousands of dollars at stake, industrial and manufacturing giants spend between $40 and $1,000 on each prediction.
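Using only the figures quoted here, and taking the $200,000 engine case as the industrial stake, the gap in per-prediction budgets works out as follows:

```latex
\begin{aligned}
\text{absolute budget ratio:}\quad & \frac{\$40}{\$0.001} = 40{,}000, \qquad \frac{\$1{,}000}{\$0.001} = 1{,}000{,}000 \\
\text{consumer spend / stake:}\quad & \frac{\$0.001}{\$9} \approx 0.011\%, \qquad \frac{\$0.001}{\$1} = 0.1\% \\
\text{industrial spend / stake:}\quad & \frac{\$40}{\$200{,}000} = 0.02\%, \qquad \frac{\$1{,}000}{\$200{,}000} = 0.5\%
\end{aligned}
```

Relative to what is at stake, the two ranges overlap; in absolute terms, though, the industrial budget is 40,000 to 1,000,000 times larger, and that absolute headroom is what pays for running many algorithms per prediction.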

“For $1,000, I can run tons of algorithms in parallel, aggregate the results, and run something known as a genetic algorithm to grow the predictor,” Kodesh reveals. “This will create a survival of the fittest effect where the most fit predictors are used and less fit predictors are discarded.”
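A toy illustration of running several predictors, aggregating their outputs, and evolving the aggregate with a genetic algorithm. This is a minimal sketch under stated assumptions, not GE's method: each individual is a vector of weights over three hypothetical base models' fault scores, fitness is classification accuracy on synthetic labeled data, and the GA uses tournament selection, uniform crossover, Gaussian mutation, and elitism. All function names and data are invented for illustration.

```python
import random

def ensemble_predict(weights, scores):
    """Aggregate base-model scores in [0, 1] by weighted average; fault if >= 0.5."""
    total = sum(weights)
    if total == 0:
        return False
    return sum(w * s for w, s in zip(weights, scores)) / total >= 0.5

def fitness(weights, data):
    """Fraction of labeled examples the weighted ensemble gets right."""
    return sum(ensemble_predict(weights, x) == y for x, y in data) / len(data)

def evolve(data, n_models, pop_size=30, generations=40, mutation_sd=0.1, seed=0):
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(n_models)] for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(pop, key=lambda w: fitness(w, data), reverse=True)
        next_pop = ranked[:2]  # elitism: the two fittest survive unchanged
        while len(next_pop) < pop_size:
            # Tournament selection: the fitter of two random individuals is a parent.
            a, b = (max(rng.sample(ranked, 2), key=lambda w: fitness(w, data))
                    for _ in range(2))
            # Uniform crossover, then Gaussian mutation clipped to [0, 1].
            child = [rng.choice(pair) for pair in zip(a, b)]
            child = [min(1.0, max(0.0, g + rng.gauss(0, mutation_sd))) for g in child]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=lambda w: fitness(w, data))

def make_data(n=200, seed=1):
    """Synthetic data: model 0 is informative, models 1 and 2 are pure noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.random() < 0.5
        good = 0.7 if y else 0.3
        informative = min(1.0, max(0.0, good + rng.gauss(0, 0.15)))
        data.append(([informative, rng.random(), rng.random()], y))
    return data

data = make_data()
best = evolve(data, n_models=3)
print("weights:", [round(w, 2) for w in best], "accuracy:", fitness(best, data))
```

Elitism guarantees that the best accuracy never decreases from one generation to the next, while weaker weight vectors are less likely to win tournaments and gradually drop out of the population: a small-scale version of the "survival of the fittest" effect Kodesh describes.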

4. Complex Models Must Be Interpretable

Consumers rarely ask why Amazon makes specific recommendations. When the stakes are higher, people ask questions. Technicians who have been in the field for 45 years will not trust machines that cannot explain their predictions.
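One simple way a model can explain a prediction is to report how much each input pushed the score up or down. The sketch below does this for a linear risk score, where the attribution is exact: each sensor's contribution is its weight times its deviation from a baseline reading. It is only an illustration with hypothetical sensor names, weights, and baseline values, and it is not presented as GE's technique; nonlinear models need more involved attribution methods such as SHAP.

```python
def explain_linear_score(weights, baseline, reading):
    """Break a linear risk score into per-sensor contributions.

    For score = bias + sum(w_i * x_i), sensor i contributes
    w_i * (x_i - baseline_i), and the contributions sum exactly to
    score(reading) - score(baseline).
    """
    contribs = {name: weights[name] * (reading[name] - baseline[name])
                for name in weights}
    # Largest absolute contributions first: what a technician reads first.
    return sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical engine sensors, weights, and a "healthy" baseline reading.
weights = {"exhaust_gas_temp_C": 0.02, "vibration_mm_s": 0.5, "oil_pressure_kPa": -0.01}
baseline = {"exhaust_gas_temp_C": 600, "vibration_mm_s": 2.0, "oil_pressure_kPa": 300}
reading = {"exhaust_gas_temp_C": 650, "vibration_mm_s": 6.0, "oil_pressure_kPa": 290}

ranked = explain_linear_score(weights, baseline, reading)
# vibration_mm_s (about +2.0), then exhaust_gas_temp_C (about +1.0),
# then oil_pressure_kPa (about +0.1)
```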

To achieve this high level of interpretability, GE needs to invent completely new technologies. Unfortunately, the talent required is painfully scarce. “I admire all the schools trying to match marketplace demand with new data scientists, but their mathematics are too shallow,” Kodesh complains.

“Real data scientists need more academic depth. They literally need to be rocket scientists who know how to filter and normalize millions of data points in real-time.”
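Normalizing millions of data points in real time usually means updating statistics one reading at a time instead of rescanning history. A minimal sketch of streaming z-score normalization using Welford's online algorithm for the running mean and variance; the class name `RunningNormalizer` is hypothetical.

```python
import math

class RunningNormalizer:
    """Streaming z-score normalization via Welford's online algorithm.

    Keeps O(1) state per channel, so it can run over an unbounded stream.
    """

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        # Uses both the old (delta) and new (x - self.mean) deviations,
        # which keeps the update numerically stable.
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        # Sample standard deviation; reported as 0.0 until two readings arrive.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def normalize(self, x):
        s = self.std
        return 0.0 if s == 0 else (x - self.mean) / s

norm = RunningNormalizer()
for x in [2, 4, 4, 4, 5, 5, 7, 9]:
    norm.update(x)
# norm.mean is 5.0 and norm.std is sqrt(32 / 7), about 2.14
```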
