Are you hiring technical AI talent for your company? Post your openings on the TOPBOTS jobs board (go to jobs board) to reach thousands of engineers, data scientists, and researchers currently looking for work.

It’s one thing to practice NLP and another to crack interviews. Giving an interview for NLP role is very different from a generic data science profile.

In just a few years, the questions have changed completely because of transfer learning and new language models. I have personally experienced that NLP interviews are getting tough with time as we make more progress. Earlier, it was all about SGD, naive-bayes and LSTM, but now its more about LAMB, transformer and BERT.

This post is a small compilation of questions I encountered while giving interviews and hope to throw some light on important aspects of Modern NLP interview. I am focusing more on the happenings in NLP after the transformer architecture which also formed the majority of my questions during interviews.

These questions are so critical for evaluating NLP engineers that if you are not asked any of these, you might be interviewing with an outdated NLP team with less scope of doing complex work.

Do you find this in-depth technical education about NLP applications to be useful? Subscribe below to be updated when we release new relevant content.

Modern NLP Interview

What is perplexity? What is its place in NLP?

Perplexity is a way to express a degree of confusion a model has in predicting. More entropy = more confusion. Perplexity is used to evaluate language models in NLP. A good language model assigns a higher probability to the right prediction.

What is the problem with ReLu?

- Exploding gradient (Solved by gradient clipping).

- Dying ReLu — No learning if the activation is 0 (Solved by parametric relu).

- Mean and variance of activations is not 0 and 1. (Partially solved by subtracting around 0.5 from activation. Better explained in fast.ai videos).

What is the difference between learning latent features using SVD and getting embedding vectors using deep network?

SVD uses linear combination of inputs while a neural network uses non-linear combination.

What is the information in hidden and cell state of LSTM?

Hidden stores all the information till that time step and cell state stores particular information that might be needed in the future time step.

Number of parameters in an LSTM model with bias

4(𝑚h+h²+h) where 𝑚 is input vectors size and h is output vectors size a.k.a. hidden.

The point to see here is that mh dictates the model size as m>>h. Hence it’s important to have a small vocab.

Time complexity of LSTM

seq_length*hidden²

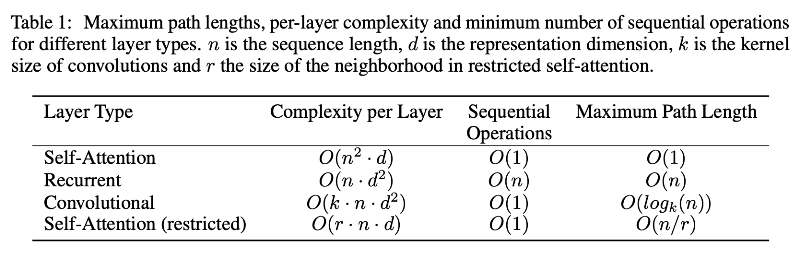

Time complexity of transformer

seq_length²*hidden

When hidden size is more than the seq_length (which is normally the case), transformer is faster than LSTM.

Why self-attention is awesome?

“In terms of computational complexity, self-attention layers are faster than recurrent layers when the sequence length n is smaller than the representation dimensionality d, which is most often the case with sentence representations used by state-of-the-art models in machine translations, such as word-piece and byte-pair representations.” — from Attention is all you need.

What are the limitation of Adam optimizer?

“While training with Adam helps in getting fast convergence, the resulting model will often have worse generalization performance than when training with SGD with momentum. Another issue is that even though Adam has adaptive learning rates its performance improves when using a good learning rate schedule. Especially early in the training, it is beneficial to use a lower learning rate to avoid divergence. This is because in the beginning, the model weights are random, and thus the resulting gradients are not very reliable. A learning rate that is too large might result in the model taking too large steps and not settling in on any decent weights. When the model overcomes these initial stability issues the learning rate can be increased to speed up convergence. This process is called learning rate warm-up, and one version of it is described in the paper Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour.” — from iprally.

How is AdamW different from Adam?

AdamW is Adam with L2 regularization on weight as models with smaller weights generalize better.

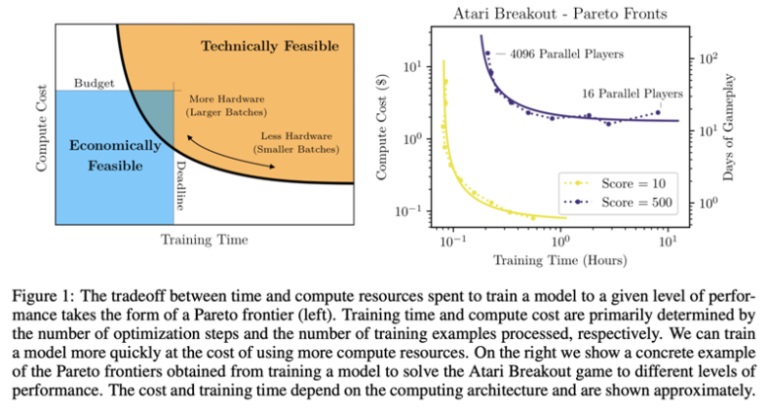

Can we train a model faster with large batch size?

Yes!

In this tweet of April 2018, Yann advised against large batch size.

Good news!

It wasn’t possible earlier but is now with new optimizers like LARS and LAMB. ALBERT is trained with LAMB with a batch size of 4096.

Do you like feature-extraction or fine tuning? How do you decide? Would you use BERT as a feature extractor or fine-tune it?

This is explained in detail in this post.

Give an example of strategy for scheduling learning rate?

Explain one cycle policy by Leslie Smith.

Should we do cross-validation in deep learning?

No.

The variance of cross-folds decrease as the samples size grows. Since we do deep learning only if we have samples in thousands, there is not much point in cross validation.

What is the difference between hard and soft parameter sharing in multi task learning?

In hard sharing, we train for all the task at once and update weight based on all the losses. In soft, we train for only one task at a time.

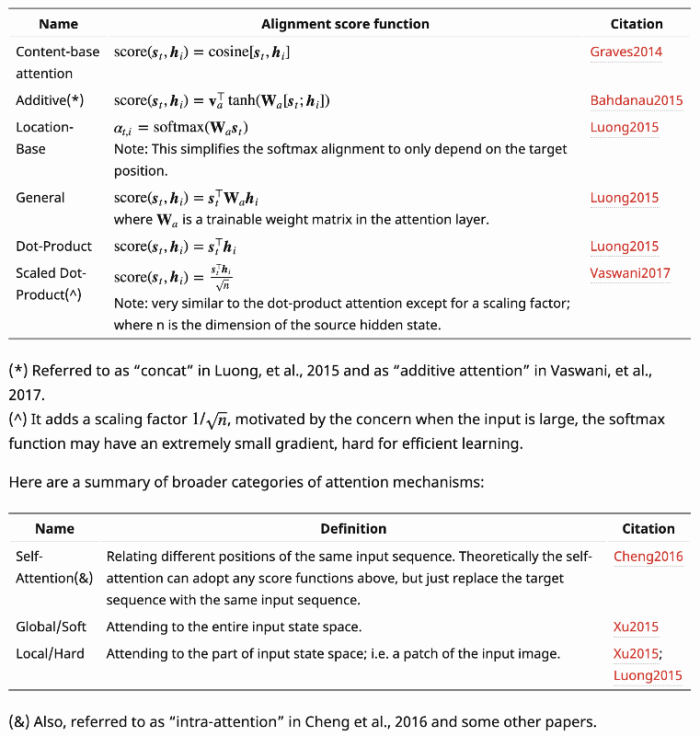

What are the different types of attention mechanism?

Difference between BatchNorm and LayerNorm?

BatchNorm — Compute the mean and var at each layer for every minibatch.

LayerNorm — Compute the mean and var for every single sample for each layer independently.

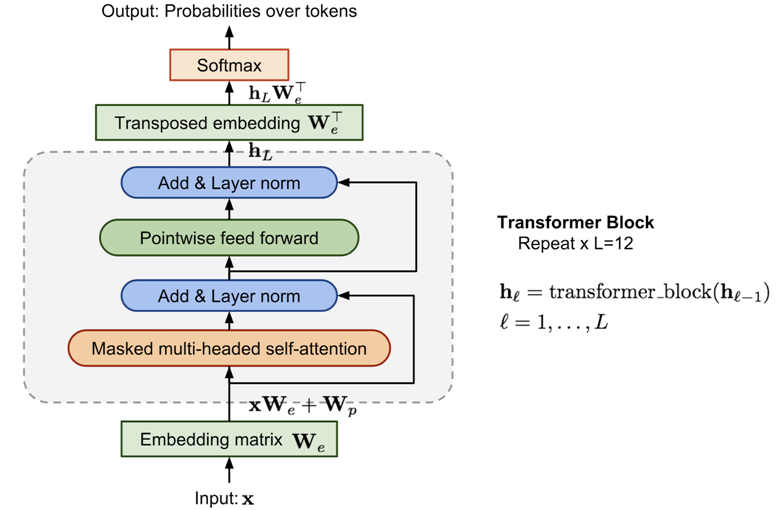

Why does the transformer block have LayerNorm instead of BatchNorm?

Looking at the advantages of LayerNorm, it is robust to batch size and works better as it works at the sample level and not batch level.

What changes would you make to your deep learning code if you knew there are errors in your training data?

We can do label smoothening where the smoothening value is based on % error. If any particular class has known error, we can also use class weights to modify the loss.

How would you choose a text encoder for a task? Which is your favourite text encoder and why?

This is a subjective question and you can read this to know more.

What are the tricks used in ULMFiT? (Not a great questions but checks the awareness)

- LM tuning with task text

- Weight dropout

- Discriminative learning rates for layers

- Gradual unfreezing of layers

- Slanted triangular learning rate schedule

This can be followed up with a question on explaining how they help.

Why transformer perform better than LSTM?

Get the explanation here.

Funny questions: Which is the most used layer in transformer?

Dropout

Trick question: Tell me a language model which doesn’t use dropout

ALBERT v2 — This throws a light on the fact that a lot of assumptions we take for granted are not necessarily true. The regularisation effect of parameter sharing in ALBERT is so strong that dropouts are not needed. (ALBERT v1 had dropouts.)

What are the differences between GPT and GPT-2? (From Lilian Weng)

- Layer normalization was moved to the input of each sub-block, similar to a residual unit of type “building block” (differently from the original type “bottleneck”, it has batch normalization applied before weight layers).

- An additional layer normalization was added after the final self-attention block.

- A modified initialization was constructed as a function of the model depth.

- The weights of residual layers were initially scaled by a factor of 1/√n where n is the number of residual layers.

- Use larger vocabulary size and context size.

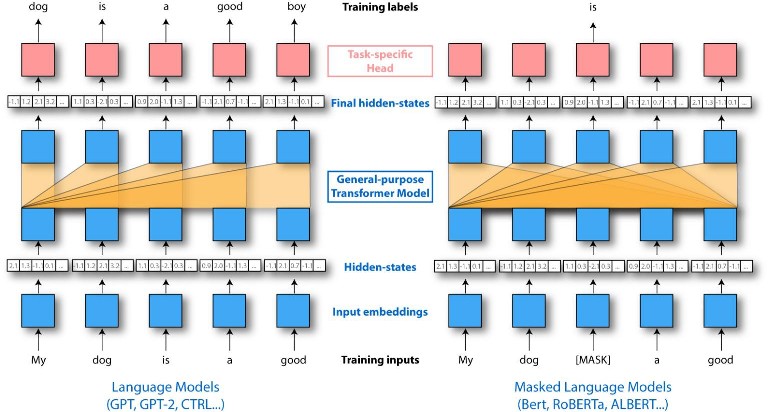

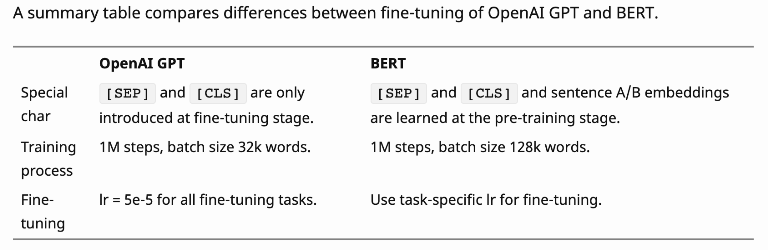

What are the differences between GPT and BERT?

- GPT is not bidirectional and has no concept of masking.

- BERT adds next sentence prediction task in training and so it also has a segment embedding.

What are the differences between BERT and ALBERT v2?

- Embedding matrix factorization (helps in reducing no. of parameters)

- No dropout

- Parameter sharing (helps in reducing no. of parameters and regularization)

How does parameter sharing in ALBERT affect the training and inference time?

No effect. Parameter sharing just decreases the number of parameters.

How would you reduce the inference time of a trained NN model?

- Serve on GPU/TPU/FPGA

- 16 bit quantisation and served on GPU with fp16 support

- Pruning to reduce parameters

- Knowledge distillation (To a smaller transformer model or simple neural network)

- Hierarchical softmax

- You can also cache results as explained here.

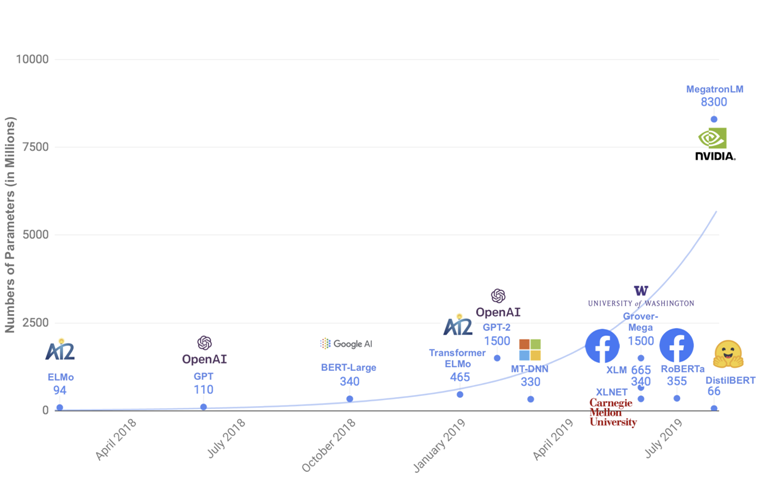

Given this chart, will you go with a transformer model or LSTM language model?

Would you use BPE with classical models?

Of course! BPE is a smart tokeniser and it can help us get a smaller vocabulary which can help us find a model with less parameters.

How would you make an arxiv papers search engine? (I was asked — How would you make a plagiarism detector?)

Get top k results with TF-IDF similarity and then rank results with

- semantic encoding + cosine similarity

- a model trained for ranking

Read more here.

How would you make a sentiment classifier?

This is a trick question. The interviewee can say all things such as using transfer learning and latest models but they need to talk about having a neutral class too otherwise you can have really good accuracy/f1 and still, the model will classify everything into positive or negative.

The truth is that a lot of news is neutral and so the training needs to have this class. The interviewee should also talk about how will he create a dataset and his training strategies like the selection of language model, language model fine-tuning and using various datasets for multi-task learning.

This article was originally published on Medium and re-published to TOPBOTS with permission from the author.

Enjoy this article? Sign up for more AI and NLP updates.

We’ll let you know when we release more in-depth technical education.

Leave a Reply

You must be logged in to post a comment.