I recently collaborated on several chatbot projects and had the opportunity to discuss with industry experts the main difficulties that such projects often encounter. While it is becoming easier and easier to build conversational assistants, some problems seem to emerge systematically as a chatbot grows, as a consequence of not having a proper intent architecture.

Some symptoms of a bad intent architecture are:

- The chatbot often gets confused between two intents, which happen to have similar training phrases.

- The chatbot doesn’t seem to match what some users say with the correct intent even though it’s already implemented, and it’s cumbersome to extract better training phrases from the vast amount of collected conversational data.

- Even though the correct intent is matched, the chatbot fails to solve users’ problems, users give negative feedback and it’s not clear how to improve the service.

In this article, I propose a way of designing intents with the goal of avoiding these bad symptoms.

Chatbot inputs: free text and multiple-choice answers

I’ll deal primarily with chatbots that accept both free text or voice (which requires intent classification) and multiple-choice answers. Accepting free text or voice in a chatbot has two main advantages:

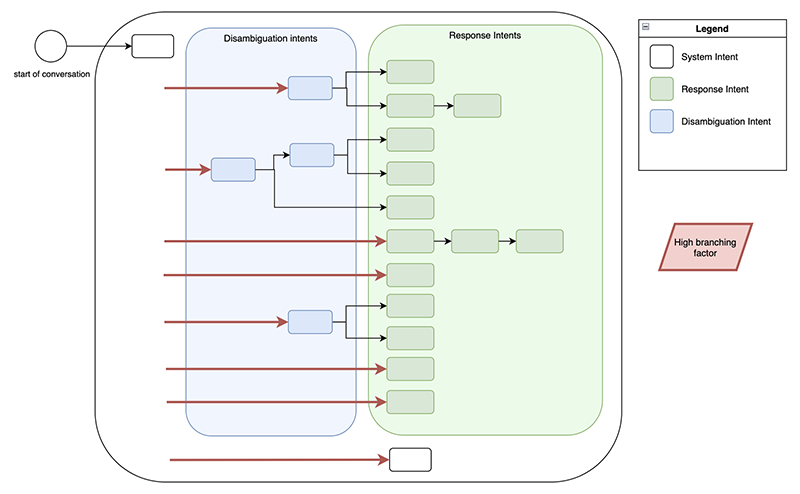

- The user can say what they want and get a proper response, without having to navigate through a sequence of multiple-choice questions. This means that at the beginning of the conversation there is a very high branching factor, i.e., a high number of possible conversation paths that can be taken from a single step of the conversation. This can’t be achieved with multiple-choice-only chatbots, as the list of choices would be too long to fit in a single response, resulting in deeper conversational paths. However, as the branching factor increases, the probability that the chatbot will give a wrong answer also increases because there are more intents to choose from.

- We get to see what the user actually wants, since they are free to write or speak. This gives us data that we can analyze to improve the chatbot over time, without involving human agents.

However, multiple-choice inputs have important advantages over free text as well:

- Even if the chatbot has not understood exactly what the user wants, it can propose plausible alternatives in the form of multiple-choice answers. Proposing several plausible solutions is much better than proposing a single wrong one.

- It’s easier to interpret users’ feedback when it comes from multiple-choice answers.
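One way to combine the two input modes is to let the classifier’s confidence decide how the chatbot replies. The sketch below is only an illustration under stated assumptions: the function `choose_reply`, its thresholds (0.8 and 0.3), and the shape of the `scores` dictionary are hypothetical and not taken from any specific chatbot platform. The intent names are borrowed from the examples used later in this article.

```python
def choose_reply(scores, confident=0.8, plausible=0.3, max_choices=3):
    """Decide whether to answer directly, offer choices, or fall back.

    `scores` maps intent name -> classifier confidence in [0, 1].
    The thresholds are illustrative assumptions and should be tuned on real data.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)

    # One clear winner: answer with that intent directly.
    if ranked and ranked[0][1] >= confident:
        return ("answer", [ranked[0][0]])

    # No clear winner: propose the plausible intents as multiple-choice answers.
    options = [name for name, score in ranked if score >= plausible][:max_choices]
    if options:
        return ("multiple_choice", options)

    # Nothing plausible: hand over to the Fallback intent.
    return ("fallback", [])


print(choose_reply({"Renew Subscription": 0.45, "Password Recovery": 0.40}))
```

With these assumed thresholds, a request that scores similarly on two intents produces a short list of choices instead of a single, possibly wrong, answer.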

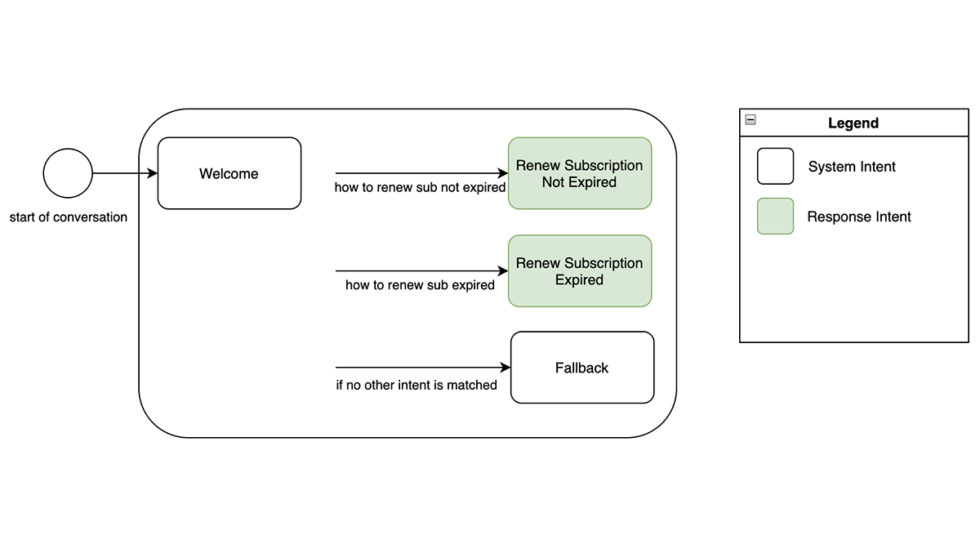

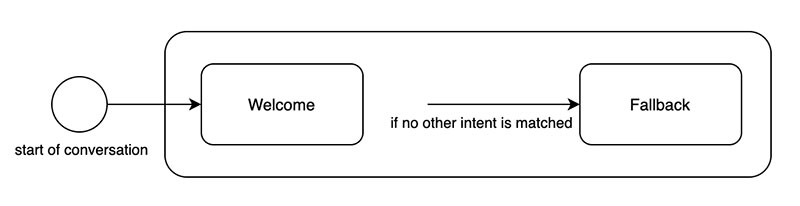

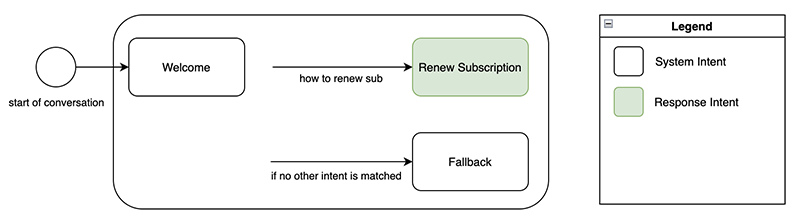

The good news is that we can use both input modes in the same chatbot, using each one where it works best. Let’s see an example with a brand-new chatbot. Its only intents are the Welcome Intent, which contains a welcome message that the chatbot shows to the user when the conversation starts, and the Fallback Intent, which is matched when no other intent matches the user’s request.

How to manage intents with similar training phrases

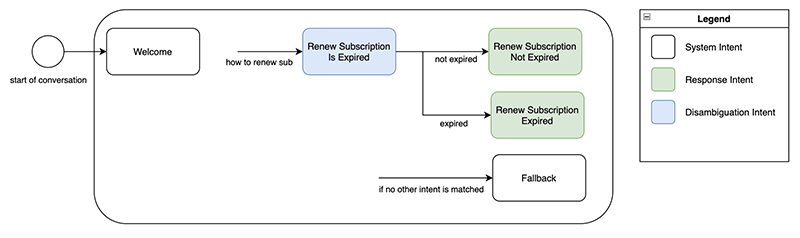

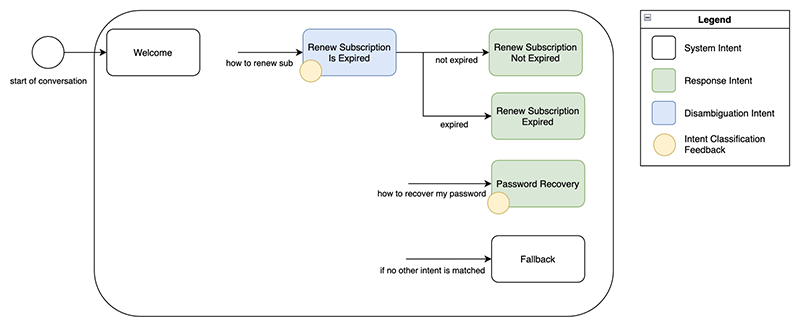

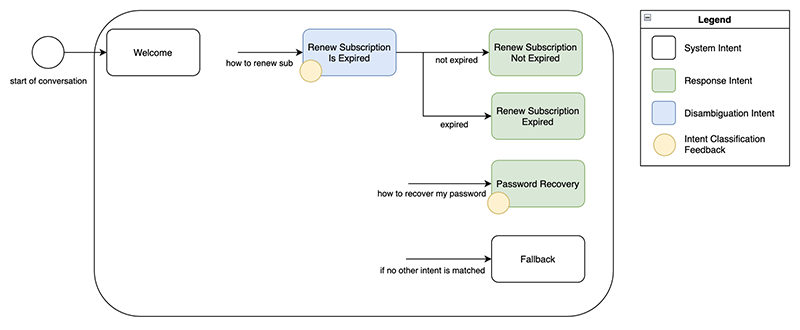

Suppose we have implemented the Renew Subscription intent, which answers with “To renew your subscription, do this […]” to questions like “How can I renew my subscription?”. So far, we can divide intents into:

- System Intents: common intents that are always present in every chatbot, like the Welcome and Fallback intents.

- Response Intents: intents whose response should solve the user’s request.

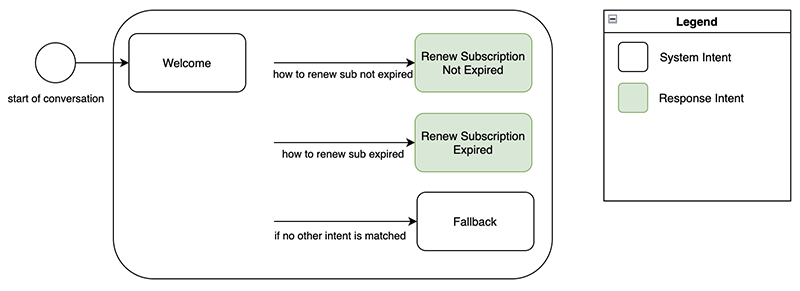

Later, we discover that there are two distinct renewal procedures: one for subscriptions that have not expired yet, and one for subscriptions that have already expired. The naive solution would be to create a second intent and try to make the training phrases of the two intents as distinct as possible. We create the Renew Subscription Expired intent, which answers with “To renew your subscription, do this […]” to questions like “How do I renew my expired subscription”. Then, we rename the original intent to Renew Subscription Not Expired and update it to answer with “To renew your subscription, do that […]” to questions like “How do I renew my not yet expired subscription”.

This may look fine at first sight. But do users really specify in their questions whether their subscription has already expired? What users want is to renew their subscription, and the fact that the correct procedure depends on other factors does not mean that their intention is different. A better way to manage this is to create an intent whose purpose is to disambiguate whether the user’s subscription is expired or not and lead to the correct response. Let’s call this new intent Renew Subscription Is Expired, which asks the user “Is your subscription expired yet?”. We should then move all the training phrases of the two Response Intents we created before to this new intent.

This new intent is not a Response Intent because its response is not supposed to directly solve the user’s request. We can classify it as a Disambiguation Intent, whose purpose is to find the right response from a shortlist of similar options. Since this goal matches the strengths of multiple-choice inputs, we can give the user a multiple-choice answer for it, like “Yes, my subscription is expired” and “No, my subscription is not expired”.
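The three kinds of intent and the way a Disambiguation Intent points to its Response Intents can be sketched as a small data structure. This is a minimal illustration, not the schema of any particular chatbot platform: `IntentType`, `Intent`, `INTENTS`, and `follow_choice` are hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from enum import Enum


class IntentType(Enum):
    SYSTEM = "system"                  # e.g. Welcome and Fallback
    RESPONSE = "response"              # the response should solve the request
    DISAMBIGUATION = "disambiguation"  # asks a question to pick the right response


@dataclass
class Intent:
    name: str
    type: IntentType
    response: str
    training_phrases: list[str] = field(default_factory=list)
    # Multiple-choice label -> name of the intent that the choice leads to.
    choices: dict[str, str] = field(default_factory=dict)


INTENTS = {
    intent.name: intent
    for intent in [
        Intent(
            name="Renew Subscription Is Expired",
            type=IntentType.DISAMBIGUATION,
            response="Is your subscription expired yet?",
            # All renewal training phrases live on the Disambiguation Intent.
            training_phrases=["How can I renew my subscription?"],
            choices={
                "Yes, my subscription is expired": "Renew Subscription Expired",
                "No, my subscription is not expired": "Renew Subscription Not Expired",
            },
        ),
        Intent("Renew Subscription Expired", IntentType.RESPONSE,
               "To renew your subscription, do this […]"),
        Intent("Renew Subscription Not Expired", IntentType.RESPONSE,
               "To renew your subscription, do that […]"),
    ]
}


def follow_choice(intent_name: str, choice: str) -> Intent:
    """Return the intent reached by selecting `choice` on a Disambiguation Intent."""
    intent = INTENTS[intent_name]
    if intent.type is not IntentType.DISAMBIGUATION:
        raise ValueError(f"{intent_name!r} is not a Disambiguation Intent")
    return INTENTS[intent.choices[choice]]
```

Note that the two Response Intents carry no training phrases in this sketch: they are reachable only through the multiple-choice answers, so the classifier never has to tell them apart.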

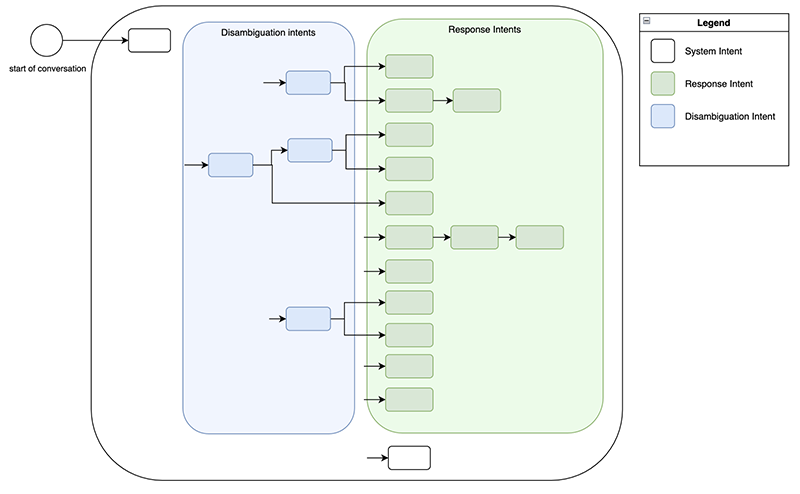

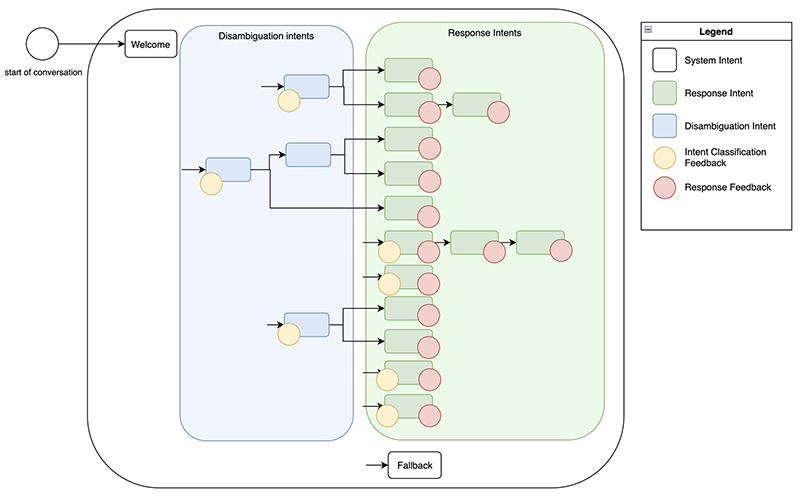

Great! Now users will no longer get wrong answers because of intents with similar training phrases, at the cost of one additional but necessary conversational step. Moreover, disambiguation can be done automatically if the system already knows whether the user’s subscription is expired or not. Once we add more intents to our chatbot, its intent architecture will look like this.

Multiple Disambiguation Intents can be chained, as well as multiple Response Intents. Note that the conversational flow through multiple Disambiguation Intents always forks into several possible paths. This is not always true for multiple sequential Response Intents, because they may solve the user’s problem in multiple steps or collect necessary user data.
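Automatic disambiguation and chaining can be sketched as a walk over the intent graph that stops only when a question cannot be answered from data the system already has. Everything named here is an assumption made for illustration: the `DISAMBIGUATION` table, the `subscription_expired` fact, and the `resolve` function.

```python
from typing import Optional

# Hypothetical graph: each Disambiguation Intent names the fact it needs
# and maps every possible value of that fact to the next intent.
DISAMBIGUATION = {
    "Renew Subscription Is Expired": {
        "fact": "subscription_expired",
        "question": "Is your subscription expired yet?",
        "next": {
            True: "Renew Subscription Expired",
            False: "Renew Subscription Not Expired",
        },
    },
}


def resolve(intent_name: str, known_facts: dict) -> tuple[str, Optional[str]]:
    """Follow chained Disambiguation Intents as far as the known facts allow.

    Returns (intent_name, question). `question` is None once an intent that is
    not a Disambiguation Intent is reached; otherwise it is the question the
    chatbot still has to ask the user.
    """
    while intent_name in DISAMBIGUATION:
        node = DISAMBIGUATION[intent_name]
        if node["fact"] not in known_facts:
            return intent_name, node["question"]
        intent_name = node["next"][known_facts[node["fact"]]]
    return intent_name, None


# The system already knows the subscription has expired, so no question is asked.
print(resolve("Renew Subscription Is Expired", {"subscription_expired": True}))
```

When the fact is unknown, the same call returns the Disambiguation Intent together with its question, and the chatbot shows the multiple-choice answers as usual.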

We have seen how to solve the problem of intents with similar training phrases with Disambiguation Intents. Let’s see now how to improve intent classification thanks to an appropriate feedback collection procedure.

How to structure feedback collection to improve intent classification

Our chatbot may still fail to deliver good customer support because of intents with training phrases that do not adequately cover the spectrum of possible requests made by users. This kind of problem is present primarily in the part of the conversational flow where we have a high branching factor, that is, right after the conversation starts.

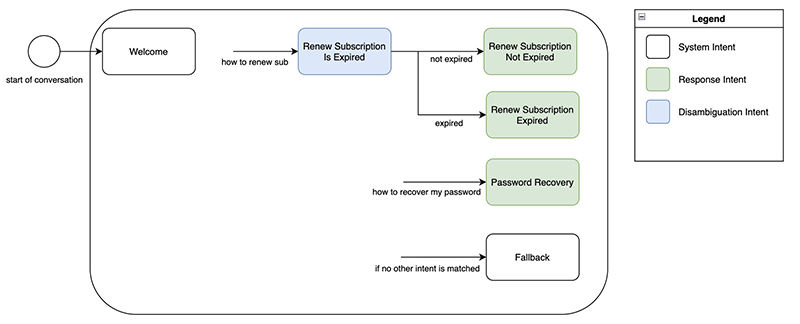

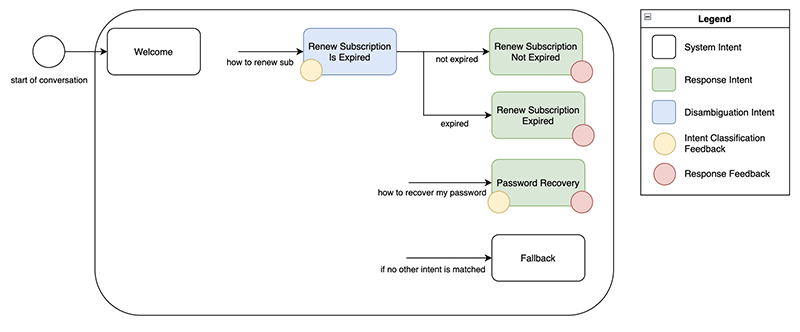

We can structure the collection of user feedback to solve this type of problem over time and with little effort. Let’s consider this simpler scenario, where the intents available at the start of the conversation are Renew Subscription Is Expired, Password Recovery, and Fallback.

When either Renew Subscription Is Expired or Password Recovery is matched, the chatbot should provide the corresponding answer and then ask if that was what the user meant. For example, if the user says “How do I renew my subscription”, the response of the intent Renew Subscription Is Expired should be something like “Do you want to renew your subscription? If so, select the option that best describes your case” with a multiple-choice answer made of “Yes, my subscription is already expired”, “Yes, my subscription is not expired yet”, and “No, that’s not what I meant”. If the user selects one of the first two choices, we can deduce that intent classification was successful and save positive implicit feedback along with the user’s text that led to the match of the Renew Subscription Is Expired intent. If the user selects the third choice, the chatbot should trigger a new intent that manages these misunderstandings (or the Fallback intent) and save negative implicit feedback, along with the user’s previous text.

By saving positive and negative feedback in this way, you get an organized collection of feedback divided by intent and by type of feedback, where you only need to analyze the step of the conversation that led to the feedback instead of the whole conversation. This will save a lot of time and headaches for anyone analyzing feedback to improve intent classification.
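Storing this implicit feedback by intent and by type can be sketched as follows. This is a minimal in-memory illustration: `ClassificationFeedback`, `FeedbackStore`, and `NEGATIVE_CHOICE` are hypothetical names, and a real project would persist the records in a database or analytics tool.

```python
from collections import defaultdict
from dataclasses import dataclass

# The choice that signals a misclassification (third option in the example above).
NEGATIVE_CHOICE = "No, that's not what I meant"


@dataclass(frozen=True)
class ClassificationFeedback:
    intent: str      # intent that was matched
    user_text: str   # the user's text that led to the match
    positive: bool   # True if the user confirmed the match


class FeedbackStore:
    """Keeps user texts grouped by (intent, positive/negative)."""

    def __init__(self) -> None:
        self._by_key = defaultdict(list)

    def record(self, intent: str, user_text: str, selected_choice: str) -> ClassificationFeedback:
        # Any choice other than the negative one counts as implicit confirmation.
        feedback = ClassificationFeedback(intent, user_text, selected_choice != NEGATIVE_CHOICE)
        self._by_key[(feedback.intent, feedback.positive)].append(feedback.user_text)
        return feedback

    def texts(self, intent: str, positive: bool) -> list:
        """Return only the user texts for one intent and one feedback type."""
        return list(self._by_key.get((intent, positive), []))


store = FeedbackStore()
store.record("Renew Subscription Is Expired", "How do I renew my subscription",
             "Yes, my subscription is already expired")
store.record("Renew Subscription Is Expired", "I forgot my password", NEGATIVE_CHOICE)
print(store.texts("Renew Subscription Is Expired", positive=False))
```

Only the single user message that triggered the match is stored, which is what makes the later review quick.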

Let’s see another example. If the user writes “How can I recover my password”, the chatbot should match the intent Password Recovery and answer with “To recover your password, do this […] Did I solve your problem?” with possible choices “Yes” (positive feedback) and “No, I meant something else” (negative feedback).

The users’ sentences that led to positive feedback are potential new training phrases that have already been validated. Keep in mind that adding too many training phrases to one intent may improve its performance but reduce its maintainability, since checking 100 sentences is slower than checking 30. I suggest a tradeoff between these two aspects: keep no more than 40 training phrases per intent while still adequately covering the spectrum of possible user requests with dissimilar training phrases.
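The tradeoff between coverage and maintainability can be sketched as a filter that adds a validated sentence only when it is sufficiently different from the phrases already kept, up to the cap of 40. The similarity measure used here (Jaccard overlap of word sets) and the 0.6 threshold are assumptions chosen for simplicity, not a recommendation from this article; any other text similarity measure could take their place.

```python
import re


def tokens(phrase: str) -> frozenset:
    """Lowercased set of words in a phrase, ignoring punctuation."""
    return frozenset(re.findall(r"[a-z0-9']+", phrase.lower()))


def jaccard(a: frozenset, b: frozenset) -> float:
    """Token-overlap similarity in [0, 1]; 1.0 means identical word sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def add_dissimilar_phrases(existing, candidates, max_phrases=40, max_similarity=0.6):
    """Add validated candidates that are not too close to any phrase already kept."""
    kept = list(existing)
    for candidate in candidates:
        if len(kept) >= max_phrases:
            break  # cap reached: stop adding to keep the intent maintainable
        candidate_tokens = tokens(candidate)
        if all(jaccard(candidate_tokens, tokens(p)) <= max_similarity for p in kept):
            kept.append(candidate)
    return kept


phrases = add_dissimilar_phrases(
    existing=["How can I renew my subscription?"],
    candidates=[
        "How can I renew my subscription",   # same words: skipped
        "I want to extend my plan",          # different wording: added
    ],
)
print(phrases)
```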

Since the goal of this type of feedback is to improve the intent classification by providing new and better training phrases, we’ll call it Intent Classification Feedback.

Intent classification errors are not the only kind of mistake that can be addressed using feedback. Let’s see why.

How to structure feedback collection to improve the intent responses

The response provided with an intent may not be enough for certain users to solve their problems, even if that seems strange to whoever writes the intent answers and tries to be as clear as possible. It is similar to what happens with product usability tests: whoever developed a product has had time to build a conceptual model that makes any action within it easy to carry out, and struggles to identify with a user who sees the product for the first time. The same happens to those who write the answers that the chatbot will give: they know the service so well that their answers look complete to them at first sight, yet new users cannot orient themselves in them.

So, how can we gather feedback on the quality of the chatbot responses and improve them? Again, by organizing feedback in an appropriate way. Let’s go back to our previous example.

It makes little sense to check the quality of the responses of the Disambiguation Intents, as they have short answers and are in the form of a question. We’ll focus on Response Intents.

If the user asks “How can I recover my password”, the chatbot should match the Password Recovery intent and answer with “To recover your password, do this […] Did I solve your problem?” with three possible choices: “Yes”, “No, I couldn’t recover my password with your instructions”, and “No, I meant something else”. The first answer is both a positive Intent Classification Feedback and a positive feedback on the quality of the response text. The second answer is a negative feedback on the quality of the response, and the third answer is a negative Intent Classification Feedback. We can define Response Feedback as feedback on the quality of the answer associated with a Response Intent.

Negative Response Feedback should lead to a handover to a human agent whenever possible. The resulting conversations must then be analyzed to understand why the intent response wasn’t good enough.
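The three choices of the Password Recovery example can be mapped to the two kinds of feedback with a small lookup table. This is a sketch with hypothetical names (`Feedback`, `PASSWORD_RECOVERY_CHOICES`, `needs_handover`). It also assumes that the second choice counts as positive Intent Classification Feedback, since the user confirms they were trying to recover a password; the article only states that it is negative feedback on the response.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Feedback:
    classification: bool       # Intent Classification Feedback
    response: Optional[bool]   # Response Feedback; None when it cannot be judged


PASSWORD_RECOVERY_CHOICES = {
    "Yes": Feedback(classification=True, response=True),
    # Assumption: the intent was right, but the instructions did not help.
    "No, I couldn't recover my password with your instructions":
        Feedback(classification=True, response=False),
    # Wrong intent, so the response text itself was never really evaluated.
    "No, I meant something else": Feedback(classification=False, response=None),
}


def needs_handover(feedback: Feedback) -> bool:
    """Negative Response Feedback should trigger a handover to a human agent."""
    return feedback.response is False


selected = PASSWORD_RECOVERY_CHOICES["No, I couldn't recover my password with your instructions"]
print(needs_handover(selected))
```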

Final suggested intent architecture with feedback collection

Overall, this is how I suggest your intent architecture should look.

As a recap:

- Free-text input is preferable for narrowing down the set of plausible correct responses to the user’s request and for gathering data about what users are asking.

- Multiple-choice input is preferable for finding the right answer from a shortlist of possible answers and for gathering feedback.

- Response Intents should directly solve the user’s request with a response. They can do it in multiple steps, especially if they need to ask the user for data.

- Disambiguation Intents have the goal of finding the right response from a shortlist of similar options, which can be Response Intents or other Disambiguation Intents. They provide multiple-choice answers and solve the problem of intents with similar training phrases.

- The Welcome Intent invites the user to state their request in free text. The goal of intent classification is then to narrow down the user’s request to a Response Intent or to a Disambiguation Intent.

- Intent Classification Feedback has the goal of improving intent classification by gathering organized and concise feedback that provides new training phrases. It is gathered right after intent classification is performed, in both Disambiguation and Response Intents.

- Response Feedback has the goal of improving the responses associated with Response Intents by gathering organized and concise feedback that provides new perspectives on the users’ problems. It is gathered right after a Response Intent is matched.

Bonus tips

Here is some general advice that appears to be beneficial in most chatbot projects. It is not intended as a complete list, as it could fill another entire article.

It is perfectly normal not to be able to manage 100% of contacts with your chatbot

Some interactions are too complex for a computer to handle, or rare enough that it’s not worth teaching the chatbot to handle them. In these cases, hand off to a human agent. A chatbot can greatly improve efficiency even if it just handles the first, mechanical part of a conversation. Aim to have the chatbot manage the 70% of contacts that are repetitive and simple, while leaving to human agents the remaining 30% that are complex and rare (the percentages may vary greatly depending on the industry).

Don’t make the chatbot repeat itself

Users hate getting identical responses multiple times because it makes the conversation seem like it is going nowhere. Avoid loops whenever possible in your intent graph and implement different responses for the same intent to avoid repeating the exact same sentences.
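Varying the wording for the same intent can be as simple as remembering the last variant used and picking a different one next time. The sketch below is illustrative only: `ResponseRotator` and the Fallback variants are hypothetical, and some chatbot platforms may offer response variations as a built-in feature.

```python
import random
from typing import Optional


class ResponseRotator:
    """Picks a response variant for an intent, never the same one twice in a row."""

    def __init__(self, variants: dict, rng: Optional[random.Random] = None) -> None:
        self._variants = variants   # intent name -> list of response texts
        self._last = {}             # intent name -> last response given
        self._rng = rng or random.Random()

    def next_response(self, intent: str) -> str:
        options = self._variants[intent]
        # Exclude the previous response, unless it is the only one available.
        fresh = [o for o in options if o != self._last.get(intent)] or options
        choice = self._rng.choice(fresh)
        self._last[intent] = choice
        return choice


rotator = ResponseRotator({
    "Fallback": [
        "Sorry, I didn't get that.",
        "Could you rephrase your request?",
        "I'm not sure I understood. Can you say it differently?",
    ],
})
print(rotator.next_response("Fallback"))
print(rotator.next_response("Fallback"))  # always differs from the previous line
```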

Address angry users

If you have the technology to understand your users’ emotions (e.g., sentiment analysis on text), use it and address angry users with something like “I’m sorry but I’m not understanding, would you like to talk to a real person?”

If you are starting a new chatbot project: start small, test, monitor, tune, and iterate

Start by implementing no more than 15 Response Intents, perform user tests, and go live. Focus on managing a few reasons for contact, but manage them well. Then, monitor Intent Classification Feedback and Response Feedback on your dashboard, review the users’ queries that didn’t work for the intents with the highest volumes (i.e., work where you have the highest ROI), and improve your chatbot. Make data-driven decisions rather than relying only on your intuition.
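Deciding which intents to review first can be sketched as a ranking by volume, with the number of negative feedback events shown next to it. The function `rank_intents_for_review` and the `(intent_name, is_positive)` event format are assumptions made for this example; your dashboard or analytics tool will likely have its own data model.

```python
from collections import Counter


def rank_intents_for_review(feedback):
    """Rank intents by feedback volume, highest first.

    `feedback` is an iterable of (intent_name, is_positive) pairs.
    Returns a list of (intent_name, total, negatives) tuples.
    """
    totals, negatives = Counter(), Counter()
    for intent, is_positive in feedback:
        totals[intent] += 1
        if not is_positive:
            negatives[intent] += 1
    # Highest volume first; ties broken by negatives, then by name for stability.
    ranked = sorted(totals, key=lambda name: (-totals[name], -negatives[name], name))
    return [(name, totals[name], negatives[name]) for name in ranked]


events = [
    ("Password Recovery", True),
    ("Password Recovery", False),
    ("Password Recovery", False),
    ("Renew Subscription Is Expired", False),
]
for name, total, negative in rank_intents_for_review(events):
    print(f"{name}: {negative} negative out of {total}")
```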

Conclusion

As in any project that can grow in size and complexity, a good intent architecture is essential in a chatbot project to keep it maintainable, monitor its performance clearly, and systematically improve it over time. Consider this guide as an aid in managing the complexities that arise as a chatbot grows, although I think that a good conceptual model of the architecture of a chatbot can save a lot of headaches even in smaller projects.

This article was originally published on Towards Data Science and re-published to TOPBOTS with permission from the author.
