The “AI for Social Good” umbrella

We increasingly see “AI for social good” initiatives from organisations like Google and Microsoft, along with conferences, reports and workshops at major “AI” conferences like NeurIPS, ICLR, and ICML. However, “AI for good” has become an arbitrary term.

Not only is “AI” itself a conflated term (one we could write books about), but the catch-all term “AI for social good” creates even more confusion about the goals and methodologies of the endeavor, as well as its values and stakeholders. From the perspective of technical practitioners, this lack of precision tells us nothing about what good is, for whom and in what context, or how it relates to other research domains.

To make efficient use of the incredible outpouring of interest we are seeing, and to avoid virtue signalling and techno-solutionism, I argue that we need more precise and contextual ways to discuss our work, develop our practice and learn lessons from similar movements. The purpose is not to close off the community but to build on the expertise of challenge owners in defining, situating and tracking our progress and values.

Tech > Data > AI for good in context

“AI for good” is not a new “conversation”. It builds on previous techno-optimistic movements that sought to use our latest technological advances to solve societal challenges, namely the Tech for Social Good movement. “Tech for social good” broadly means using digital technology to tackle some of the world’s toughest challenges.

While the definitions vary, organisations in this space call it Digital Social Innovation, Social Tech, Civic Tech, Responsible Tech, Technology for social justice, Tech for International Development, Tech for Sustainable Development and Tech For Social Good. Cassie Robinson takes us through the historical roots of the movement, which became more organised around 2008. Civic tech at that time was more focussed on government, governance and democratic processes, and not so much on wider societal problems like education, poverty, inequality, sustainability, ageing, etc.

It slowly branched off into “data for social good”, and initiatives like DataKind, Bayes Impact, Data Science for Social Good, AI4ALL, hack4impact, and uptake.org flourished and fostered new communities of data/ML aficionados working on a range of societal challenges.

People in the tech for good community, such as Tech For Good Global, Bethnal Green Ventures, CAST, Nominet Trust, Doteveryone, Nesta, Good Things Foundation and Comic Relief, as well as numerous researchers and entrepreneurs (myself included), have reflected on the definitions, principles, challenges, and theories of change. Does technology give power and agency to people? Is it made responsibly? Whose voice is excluded from the conversation?

Similarly, today we are seeing conversations around the applications and implications of Machine Learning. Is it fair? Unbiased? Transparent? Accountable? At this point in time, it’s worth re-examining the value propositions and practices of “AI for social good”, as well as looking back for lessons. This is because:

- Machine learning and data science methodologies are maturing and becoming more central to meeting grand social challenges and supporting social innovation, philanthropy, international development, and humanitarian aid.

- There is still a disconnect between the scientific and tech communities and the social sector (charities, civil society, public services, etc.), i.e. those with the good questions, often the social sector and people with lived experience, are not included in the conversations.

- The work of researchers, designers, ethnographers, economists, ML scientists, data scientists, policymakers, etc is still fragmented and there is a need for different lenses to be combined in order to have a systemic view of intractable international challenges.

- Social good looks different in different contexts, and we know that. The more we continue building ML systems with the developed world in mind, as we currently do, the more the technology will encode the infrastructure and cultural conditions of developed regions and merely assume the needs of people elsewhere, either excluding their voices or even blatantly biasing against different populations.

- The public still doesn’t understand the ways AI can be used for “good” and isn’t necessarily aware of what is available to them, while funders who want to resource it don’t necessarily understand its impact.

- It’s important to ensure that more diverse talent comes into the field.

“AI for good”, what?

Artificial intelligence (data and learning algorithms), while not a silver bullet, could contribute to tackling some of the world’s most challenging social problems.

Narrowing down the term “AI for social good”, we can include the set of methods, mechanisms, and tools for which a) the problem addressed is contextual and critical for the region of interest; b) the problem, or its viable solutions, differs from those in other regions; c) the proposed ML solution effectively addresses these differences, as shown by some measurable outcome; and d) the proposed solution substantially uses ML.

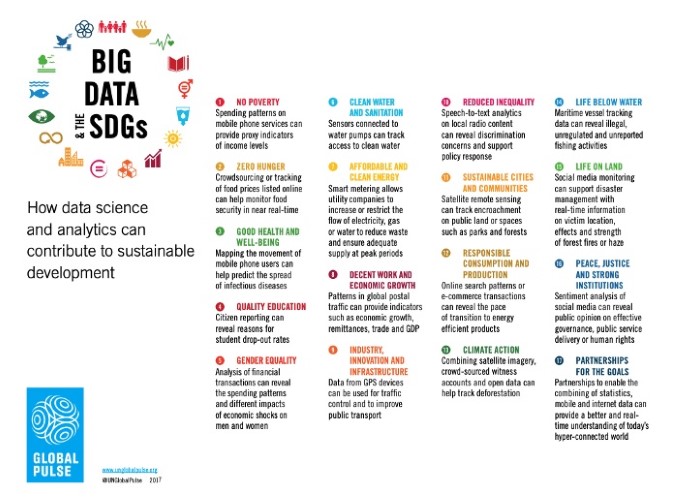
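
The four criteria above can be read as a simple screening checklist. The following is only an illustrative sketch: the class name `ProjectAssessment`, its field names, and the example values are assumptions made for this illustration, not part of any established framework.

```python
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ProjectAssessment:
    """Hypothetical record of how a project fares against criteria a) to d)."""
    critical_for_region: bool         # a) problem is critical for the region of interest
    differs_from_other_regions: bool  # b) problem or its solutions differ from other regions
    measurable_outcome: bool          # c) solution addresses those differences measurably
    substantially_uses_ml: bool       # d) solution substantially uses ML

    def unmet_criteria(self) -> List[str]:
        """Names of the criteria the project does not yet satisfy."""
        return [name for name, met in asdict(self).items() if not met]

    def qualifies(self) -> bool:
        """True only when all four criteria are met."""
        return not self.unmet_criteria()


# Made-up example: a project with no measurable outcome defined yet.
example = ProjectAssessment(
    critical_for_region=True,
    differs_from_other_regions=True,
    measurable_outcome=False,
    substantially_uses_ml=True,
)
print(example.qualifies())
print(example.unmet_criteria())
```

A checklist like this does not decide whether a project is “good”; it only makes explicit which of the four questions still lacks an answer.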

In terms of application areas, one way to frame the impact of “AI for social good” could be through the lens of the United Nations Sustainable Development Goals (SDGs). These are a set of 17 objectives that must be addressed in order to bring the world onto a more equitable, prosperous, and sustainable path.

These global goals are: No Poverty, Zero Hunger, Good Health and Well-being, Quality Education, Gender Equality, Clean Water and Sanitation, Affordable and Clean Energy, Decent Work and Economic Growth, Industry, Innovation and Infrastructure, Reduced Inequalities, Sustainable Cities and Communities, Responsible Consumption and Production, Climate Action, Life Below Water, Life on Land, Peace, Justice and Strong Institutions, and Partnerships for the Goals.

These SDGs have 169 targets and 232 indicators and you can explore them here.

I think this could be a useful framework for examining global challenges and social impact, as it is relatively well thought through at an international level and was developed and refined by hundreds of multidisciplinary experts. It is also already being integrated into national and transnational policies and referenced in academia.

Unique ML Research Challenges in International Sustainable Development

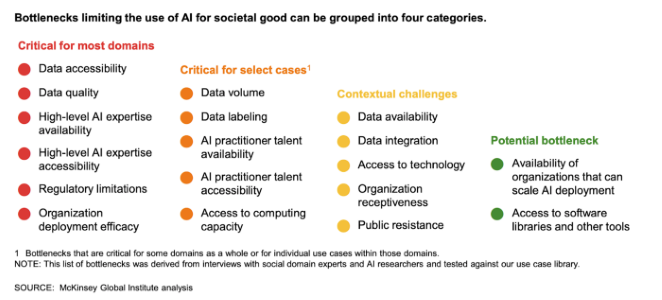

There are various bottlenecks limiting the use of AI for social good, as shown below.

Unfortunately, the use of AI to address societal challenges is particularly hard in the Global South. There are many reasons for that, apart from the longstanding structural socioeconomic and political conditions. For example, governments in many developing countries are not producing enough data to help them assess their challenges and come up with workable solutions. In many cases there is also corruption, along with limited computational capacity, internet accessibility, and technical, human and financial resources to implement any such project.

Additionally, I frequently see data science and ML practitioners who, despite their good will, play the saviors of developing nations with their newly developed method “patches”. While social impact might be a consideration, one can see that the authors are more interested in publishing at the latest ML conference and are on the lookout for a problem to fit their solution, rather than primarily solving the problem at hand. And while I acknowledge their good intentions, I find this rather disingenuous.

We should also go beyond the narrative that the environmental conditions of the Global South are roadblocks for research, and beyond only testing or applying our solutions in developing countries. Why not also develop contextual research agendas that arise from the unique challenges of non-Western countries and that can be valuable to the whole research community? This is what Arteaga et al. (2018) argue, providing several technical challenges and suggestions for ML research problems that could motivate such agendas, like:

- Learning with Small Data, developing new models and general optimization routines that specifically concentrate on small data settings

- Learning from Multiple Messy Datasets, creating methods that can integrate multiple diverse datasets, advancing the study of fields such as bootstrapping, data imputation, and machine fairness.

- Intelligent Data Acquisition and prioritization of which new data to collect (active learning and active feature-value acquisition models that can be deployed successfully in settings with limited infrastructure)

- Transfer Learning for Low-Resource Languages

- Learning with Limited Memory and Computation which relates to research on ML in embedded systems as well as to recent advances in knowledge distillation

- Novel Compression Algorithms for video, text, and voice data

- Effective Decision Support Systems to help scale public services and avoid corruption by detecting patterns of fraud in complex data and ensuring robust systems

- Studying fairness, accountability, bias and transparency in decision-making systems that use machine learning

- Novel research in the growing subfield of ML and causality that would guide policy decisions.
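
To make one item on this list concrete, here is a minimal sketch of uncertainty sampling, one common active learning strategy for deciding which new data to label when the labelling budget is small. It assumes a binary classifier has already produced a probability for each unlabelled item; the function names, the village identifiers and the probabilities are all made up for illustration and do not come from Arteaga et al.

```python
import math
from typing import Dict, List


def binary_entropy(p: float) -> float:
    """Entropy in bits of a yes/no prediction made with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully confident prediction carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def select_for_labelling(predicted: Dict[str, float], budget: int) -> List[str]:
    """Return the `budget` unlabelled items the model is least sure about."""
    ranked = sorted(
        predicted,
        key=lambda item: binary_entropy(predicted[item]),
        reverse=True,  # highest entropy (most uncertain) first
    )
    return ranked[:budget]


# Hypothetical model outputs for four unlabelled survey sites.
pool = {"village_a": 0.97, "village_b": 0.52, "village_c": 0.10, "village_d": 0.45}
to_label = select_for_labelling(pool, budget=2)
print(to_label)
```

The idea is that field effort goes to the sites where a new label would be most informative to the model, rather than to sites chosen at random. Whether that is the right priority in a given context is exactly the kind of question that needs local expertise.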

Rethinking purpose and practice

As I see those initiatives pop up, I cannot help but notice that there isn’t a clear social purpose or a set of strong values holding them all together. While it might not be helpful to define the elusive term “AI for social good” any more than I did above, I think it’s worth examining the process and practice by which we come up with those interventions. Below, I lay out some thoughts and recommendations for practitioners and researchers with regard to “AI for social good” practice:

- Before you claim that your method or work can be used to address a certain societal challenge, question those social problems: why do they exist, and which ecosystems and actors are working on them? Take your time to develop a critical definition.

- Ask who is causing the problem, and who is affected by it? When did the problem first occur, or when did it become significant? Is this a new problem or an old one? How much, or to what extent, is this problem occurring? How many people are affected by the problem? How significant is it? The reason for this reflection is that if we are not mindful of the problem’s dynamics as well as power and information asymmetries we might be trying to answer the wrong questions.

- It’s better to define the problem in terms of needs, and not solutions (i.e. where can I apply my fancy method?). If you define the problem in terms of possible solutions, you’re closing the door to other, possibly more effective solutions and you might be wasting your time.

- Examine any counterarguments or related controversies and think hard about the potential malicious uses of your implementation. State them clearly in your paper. Think about your data and models (see model cards for model reporting, datasheets for datasets).

- Consider creating maps of the multiple data ecosystems/stakeholders that will help you understand and explain where and how the use of data creates value. A data ecosystem map will identify the key data stewards and users, the relationships between them and the different roles they play.

- However, move away from a logic of data extraction towards data empowerment, which involves and supports people in the responsible collection and use of data.

- Consider combining and triangulating data and methods. Rather than relying on a single method or a single data source (e.g. social media data, government data, or mobile phone data), combine data sources to address the limitations of individual data types.

- Consider experimenting with different modes of data pooling and sharing such as data collaboratives, data trusts, and data commons.

- Engage in sincere international collaborations. In many international research endeavors, local scientists are commonly confined to data collection and fieldwork, while foreign collaborators shoulder a significant amount of the analytical science. This is echoed in a 2003 study of international collaborations in at least 48 developing countries, which suggested that local scientists too often carried out fieldwork in their own country for the foreign researchers. This process does not build local capacity.

- Instead, provide the tools and resources for others to create a similar model or analysis. In some instances, this might involve codifying what has been achieved by a project by creating specific toolkits or curricula that can be used by others in different countries and contexts.

- Research should be open and reproducible. This cannot be fully unpacked here, but it means that at least a) research shouldn’t be paywalled but instead published in a public archive like arXiv; b) code for the experiments and models should be released under an open license, e.g. MIT, and published on GitHub; and c) data should be open under, e.g., a Creative Commons license.

- Consider publishing in academic journals where your ML work can be groundbreaking and would genuinely transfer new knowledge to another field. I frequently see papers in venues like NeurIPS that I think would also be great for journals in Economics or HCI.

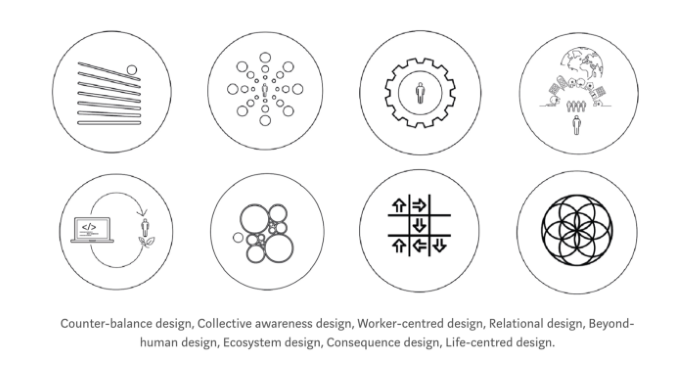

- Move away from a very data-centric view to the role of power, politics and institutional context and experiment with other approaches such as behavioral science and collective intelligence. Move away from human-centered design and reach out to your colleagues in sociology, anthropology, history, ethnography, and cultural studies who have been thinking for a long time about the problem you are trying to solve. Some other approaches to consider are laid out here by Cassie Robinson:

Regardless of the different terms we use, it’s time to link our activity and our learning and to take up the responsibility for the deep social, institutional and personal change needed. Our new tools come with new affordances and associated risks. We should aim to be more precise and keep the challenges we identify and their scientific and sociotechnical basis in close proximity through deep collaboration, dialogue, and serious engagement.

*Opinions expressed above are solely my own and do not necessarily reflect the views or opinions of my employer.

This article was originally published on Medium and re-published to TOPBOTS with permission from the author.
